The intensity profile data is fit with a Gaussian and a logistic function. First, the data is scanned quickly to get initial values for the parameters. The location of the Gaussian is found by approximating the derivative at each point in the data; the derivative information gives the location of every peak. The peaks are then sorted from highest to lowest, and the highest one supplies the height and location of the Gaussian peak. The lowest-intensity point after the Gaussian is used as the beginning of the logistic function. The height of the flat part of the logistic is the average of the last twenty points of the data. The rest of the parameters are found in a similar fashion. These initial parameters are reflected in the following graph, where the data is shown in blue, the Gaussian function in yellow, and the logistic function in pink.

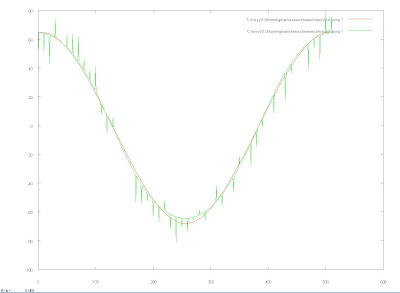
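
The initial estimation steps described above can be sketched roughly as follows. This is a minimal illustration in Python, not the code used in the project: the function name, the forward-difference derivative, the sign-change peak test, and the returned dictionary keys are all assumptions made for the example.

```python
def estimate_initial_parameters(profile, tail_count=20):
    """Rough starting values for the fit (hypothetical helper, illustration only)."""
    n = len(profile)
    # Approximate the derivative at each point with forward differences.
    deriv = [profile[i + 1] - profile[i] for i in range(n - 1)]
    # Assumed peak test: the derivative goes from positive to non-positive.
    peaks = [i for i in range(1, n - 1) if deriv[i - 1] > 0 and deriv[i] <= 0]
    if not peaks:
        raise ValueError("no peak found in profile")
    # Sort the peaks from highest to lowest and keep the highest one.
    peaks.sort(key=lambda i: profile[i], reverse=True)
    peak_index = peaks[0]
    # The lowest-intensity point after the peak marks the start of the logistic.
    logistic_start = min(range(peak_index + 1, n), key=lambda i: profile[i])
    # Height of the flat part of the logistic: mean of the last samples.
    tail = profile[-tail_count:]
    plateau = sum(tail) / len(tail)
    return {
        "peak_index": peak_index,
        "peak_height": profile[peak_index],
        "logistic_start": logistic_start,
        "plateau": plateau,
    }
```

A real profile is noisy, so the peak test would probably need some smoothing before it gives stable results; the sketch leaves that out.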

Once the initial parameters have been approximated as described above, they are optimized with the Levenberg-Marquardt algorithm, using the implementation found in VNL by instantiating "vnl_levenberg_marquardt". The following graph shows the result of optimizing the parameters from the previous graph. Running the optimization widens the Gaussian, changes how steep the logistic function is, and adjusts the height of the flat part of the logistic. However, the location of the start of the logistic function and the position of the peak stay roughly the same.
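
To make concrete what a Levenberg-Marquardt optimizer minimizes, here is a small Python sketch of a model and its least-squares error. The exact model is an assumption: the post only says that a Gaussian and a logistic function are used, so the sum of the two, the parameter names, and the use of `x0` as the logistic midpoint are illustrative choices, not the form used in the actual fit.

```python
import math

def logistic(x, plateau, steepness, x0):
    """Logistic curve rising to `plateau`, written to avoid overflow in exp."""
    z = steepness * (x - x0)
    if z >= 0:
        return plateau / (1.0 + math.exp(-z))
    e = math.exp(z)
    return plateau * e / (1.0 + e)

def model(x, amp, mu, sigma, plateau, steepness, x0):
    """Assumed model: a Gaussian peak plus a logistic step."""
    gaussian = amp * math.exp(-((x - mu) ** 2) / (2.0 * sigma ** 2))
    return gaussian + logistic(x, plateau, steepness, x0)

def residuals(params, xs, ys):
    """One residual per sample; a least-squares optimizer works on this vector."""
    return [model(x, *params) - y for x, y in zip(xs, ys)]

def sum_squared_error(params, xs, ys):
    """Scalar least-squares error of the fit."""
    return sum(r * r for r in residuals(params, xs, ys))
```

The optimizer adjusts all six parameters to reduce `sum_squared_error`, starting from the initial estimates.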

In the current segmentation metric, implemented in itk::IntensityProfileMetric, each intensity profile is modeled, and the location between the Gaussian peak and the start of the logistic function, the brain-skull interface, is used as a reference to tell how far the current intensity profile is from its optimal location. Because the optimization leaves the peak position and the start of the logistic roughly unchanged, optimizing the parameters does not have a large impact on the information that is finally extracted from the intensity profiles. Therefore, the least-squares error that the fitting function reports does not strongly correlate with how confidently the location of the brain-skull interface was approximated. Another issue that affects the error is the flat part of the Gaussian, which represents all of the folds in the brain matter. This area contributes a great deal to the error in the resulting fit, but its contribution can be misleading: if the brain matter is very stable, the error will be lower even though the other parameters could still be off by a significant amount. So the error could be factored into the segmentation process, but it can produce incorrect information, as shown in the Gaussian Scaled Metric post, where the error was weighted the same amount as the difference in location of the brain-skull interface. The first plot in that post illustrates that once the error is factored in, the metric no longer converges to an optimal value; instead, the metric becomes very uniform and gradient descent is not able to converge to an optimal solution.
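
As a rough illustration of why the fit error carries little weight here, the quantity the metric uses depends on only two of the fitted values. The sketch below is in Python and is not the itk::IntensityProfileMetric code: the function names are hypothetical, and taking the exact midpoint as the location "between" the peak and the logistic start is an assumption.

```python
def interface_location(peak_location, logistic_start):
    """Assumed brain-skull interface: midpoint of the peak and the logistic start."""
    return 0.5 * (peak_location + logistic_start)

def profile_offset(peak_location, logistic_start, expected_location):
    """Signed distance of the estimated interface from where it should sit."""
    return interface_location(peak_location, logistic_start) - expected_location
```

The Gaussian width, the logistic steepness, and the plateau height do not enter this calculation at all, so a fit can change them substantially, and change its least-squares error with them, while the offset stays the same.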

Thus, using the error to weight the effect of each intensity profile could be very deceiving in the final metric value. A method that looks at a neighborhood of the difference in location of the brain-skull interface for the intensity profile in question would be a more suitable alternative.